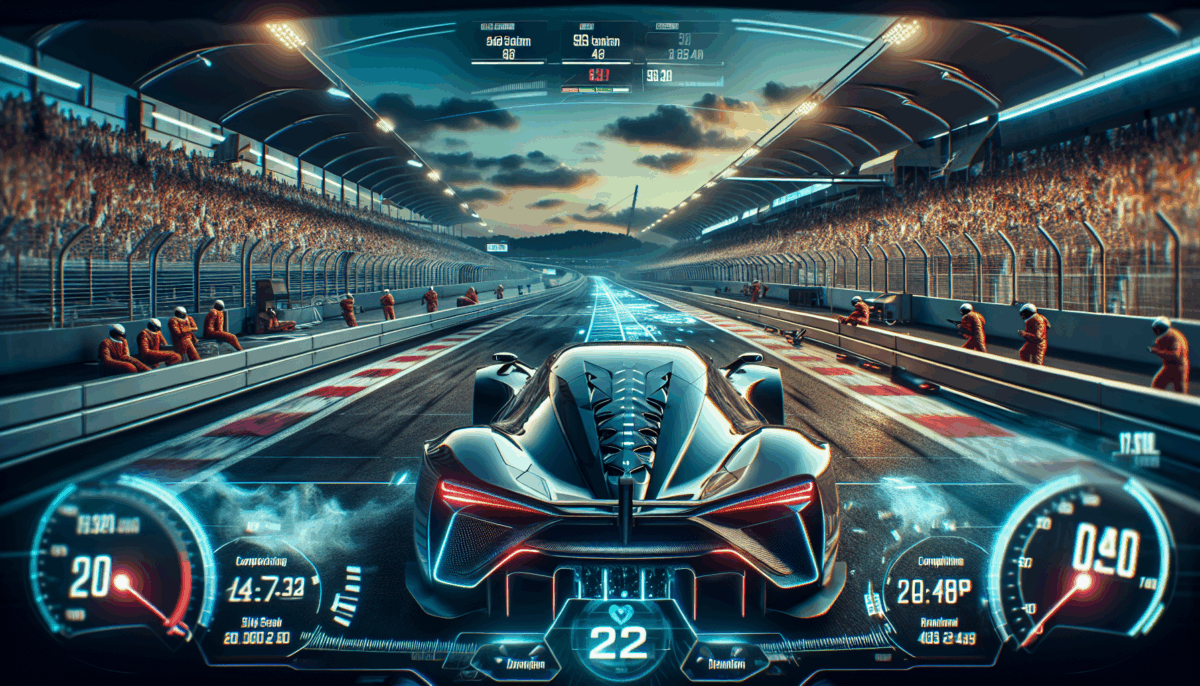

Feel the Excitement: Race the Polestar 5 in Gran Turismo 7, with Lap Times Comparable to Real Life

We independently review everything we recommend. When you buy through our links, we may earn a commission which is paid directly to our Australia-based writers, editors, and support staff. Thank you for your support!

Quick Overview

- Polestar collaborates with Gran Turismo to unveil the Polestar 5 in the digital racing arena.

- The in-game car faithfully reproduces the real Polestar 5's driving characteristics.

- This partnership draws on insights from professional racer Igor Fraga.

- Gran Turismo 7 will feature a unique Polestar time trial event with exclusive rewards.

- A documentary video about the collaboration will be available on Polestar’s YouTube channel.

Polestar 5 Joins Gran Turismo 7

The partnership between Swedish electric performance brand Polestar and the long-running racing game Gran Turismo brings a new electric performance car to one of the best-known driving games. With the Polestar 5 included in Gran Turismo 7, players can experience a virtual version of the car's sophisticated design and performance.

Precise Digital Representation

Polestar and Polyphony Digital, a subsidiary of Sony Interactive Entertainment, gave each other unusually close access to build a highly accurate digital version of the Polestar 5. The goal is for the in-game car to reproduce the real-life driving experience, with dynamics and handling that drivers of the actual car would recognize.

Expertise from Professional Racing

Igor Fraga, a professional racer and sim driver, played a key role in the project, contributing his expertise to make the driving experience believable. He tested prototypes and provided feedback that shaped how the car was developed for the game.

Unique Events and Rewards

Gran Turismo 7 will feature a special Polestar time trial event in which players compete for a rare chance to attend the World Finals of the 2025 GT World Series in Fukuoka, Japan. The event reflects both brands' aim of bringing real and virtual motorsport closer together.

Documentary and Future Aspirations

A documentary film about the partnership will be released on Polestar's YouTube channel. The collaboration marks the start of Polestar's move into digital gaming, and the company intends to introduce more of its vehicles in the years ahead.

Conclusion

The alliance between Polestar and Gran Turismo is a notable step in bringing electric vehicles into racing games. By delivering a realistic and engaging experience, both brands aim to showcase the potential of electric cars while giving gamers an exciting new challenge.