NVIDIA’s Next-Gen Vera Rubin Platform Commences Full Production: Significant Enhancements in Computing, Energy Efficiency, and Water Consumption

Quick Read

- NVIDIA unveils the Rubin platform, built from six new co-designed chips.

- The Rubin GPU delivers up to 5x the inference performance of the previous Blackwell generation.

- Rubin’s framework emphasizes extreme co-design along with efficiency.

- Key components include the Vera CPU, Rubin GPU, and NVLink 6 Switch.

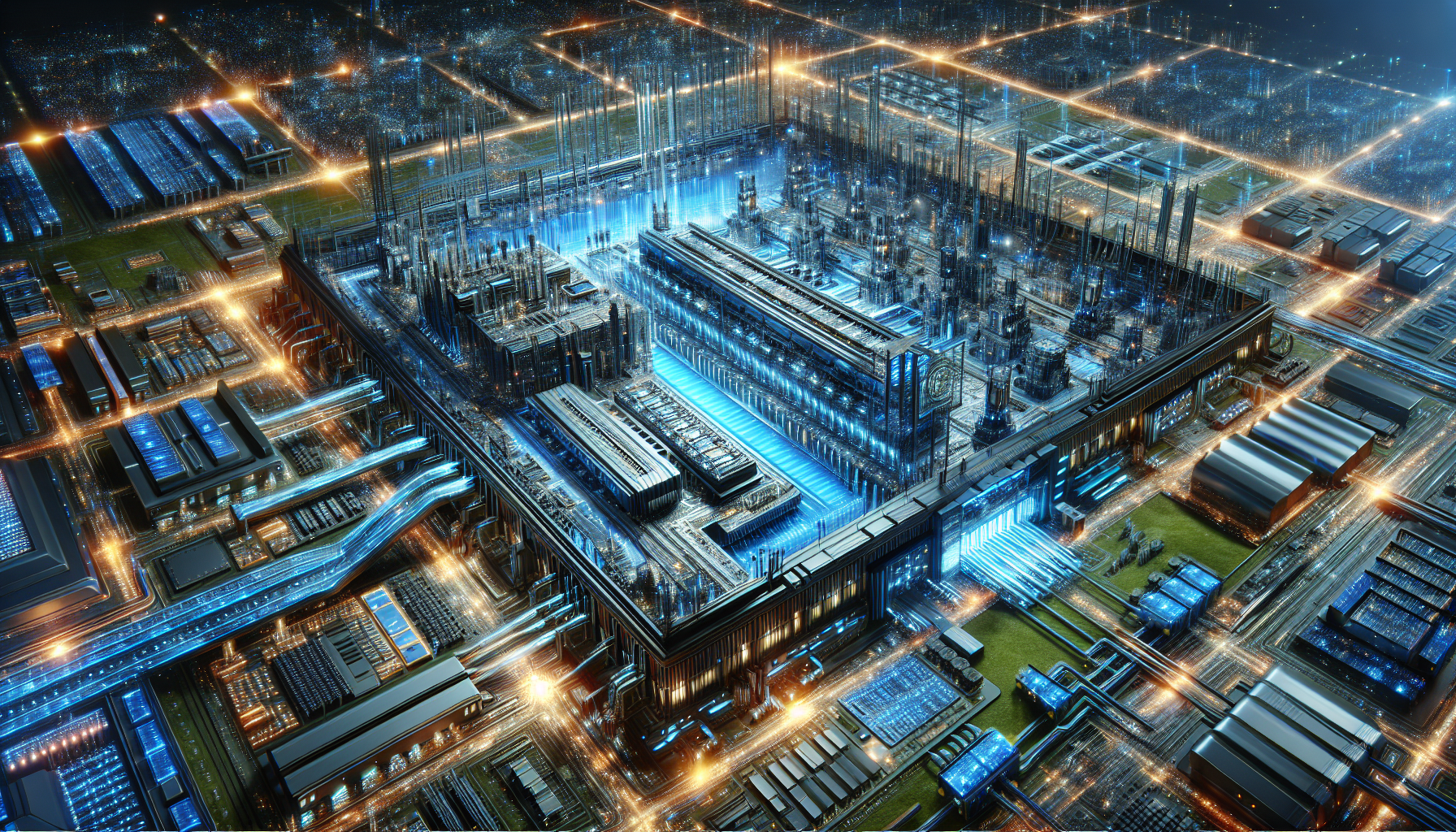

- Partnership with Microsoft on the “Fairwater” AI superfactories revealed.

- Rubin is set to drive next-gen AI with reduced costs and enhanced reliability.

- Broad availability of the platform is planned for the second half of 2026.

The Six-Chip Approach to Extreme Co-Design

The Rubin platform is built on NVIDIA’s extreme co-design approach, which develops hardware and software together as a single stack. The Vera CPU, Rubin GPU, and NVLink 6 Switch are designed to keep data moving between processors with minimal stalls. Together with the ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-6 Ethernet Switch, these six chips are meant to turn data centers into efficient AI factories that transform raw data into actionable insights.

A Significant Advancement in Performance and Efficiency

NVIDIA says the Rubin GPU offers up to 5x the inference performance of the Blackwell generation. Notably, this was achieved with only a 1.6x increase in transistor count, which points to architectural gains rather than sheer scaling. According to NVIDIA, the result is a 10x reduction in the cost per inference token, substantially lowering the barrier to entry for businesses deploying advanced AI models.
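To put those ratios in perspective, the arithmetic can be sketched in a few lines of Python. The 5x, 1.6x, and 10x figures come from the claims above. The baseline price of $2.00 per million tokens is purely hypothetical and is there only to show how the cost ratio would apply.

```python
# Ratios quoted in the article (Rubin relative to Blackwell).
PERF_RATIO = 5.0          # up to 5x inference performance
TRANSISTOR_RATIO = 1.6    # 1.6x transistor count
COST_REDUCTION = 10.0     # 10x lower cost per inference token

# Hypothetical baseline, NOT a real price: dollars per million tokens.
BASELINE_COST_PER_M_TOKENS = 2.00


def per_transistor_gain(perf_ratio: float, transistor_ratio: float) -> float:
    """Performance gained per unit of added transistor budget."""
    return perf_ratio / transistor_ratio


def cost_after_reduction(baseline_cost: float, reduction_factor: float) -> float:
    """Cost once a claimed reduction factor is applied to a baseline."""
    return baseline_cost / reduction_factor


gain = per_transistor_gain(PERF_RATIO, TRANSISTOR_RATIO)
new_cost = cost_after_reduction(BASELINE_COST_PER_M_TOKENS, COST_REDUCTION)

print(f"Inference performance per transistor: {gain:.3f}x")
print(f"Cost per million tokens: ${BASELINE_COST_PER_M_TOKENS:.2f} -> ${new_cost:.2f}")
```

In other words, if the headline figures hold, each transistor is doing roughly three times the inference work it did on Blackwell. The rest of the claimed gain would have to come from elsewhere in the stack, such as memory, interconnect, and software.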

A Closer Look at the Vera CPU and Rubin GPU

The Vera CPU, built on custom Olympus cores that implement the Armv9.2-A architecture, handles the data movement and control logic that feed the Rubin GPUs. Each Rubin GPU carries eight stacks of HBM4 memory, providing 288 GB of capacity and 22 TB/s of bandwidth, which is critical for handling trillion-parameter models. HBM4 is key to easing the memory bottleneck so that the GPU’s compute units are not left waiting for data.
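A rough back-of-the-envelope sketch shows why those two memory figures matter for trillion-parameter models. The 288 GB and 22 TB/s values come from the paragraph above. The bytes-per-parameter choices and the decimal unit convention (1 GB = 10^9 bytes) are assumptions made for illustration, and the estimate covers model weights only, ignoring KV cache, activations, and runtime overhead.

```python
import math

# Per-GPU figures quoted in the article.
HBM_CAPACITY_GB = 288      # HBM4 capacity per Rubin GPU
HBM_BANDWIDTH_TBPS = 22    # HBM4 bandwidth per Rubin GPU


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: float,
                         capacity_gb: float = HBM_CAPACITY_GB) -> int:
    """Smallest GPU count whose combined HBM can hold the weights."""
    return math.ceil(weight_footprint_gb(num_params, bytes_per_param) / capacity_gb)


def full_memory_sweep_ms(capacity_gb: float = HBM_CAPACITY_GB,
                         bandwidth_tbps: float = HBM_BANDWIDTH_TBPS) -> float:
    """Time to read the whole HBM once at peak bandwidth, in milliseconds."""
    seconds = capacity_gb / (bandwidth_tbps * 1000)  # TB/s -> GB/s
    return seconds * 1000


ONE_TRILLION = 1e12

# Assumed precisions: 16-bit, 8-bit, and 4-bit weights.
for label, nbytes in [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    gpus = min_gpus_for_weights(ONE_TRILLION, nbytes)
    print(f"{label} weights: {weight_footprint_gb(ONE_TRILLION, nbytes):.0f} GB "
          f"-> at least {gpus} GPUs")

print(f"One full pass over HBM: {full_memory_sweep_ms():.2f} ms")
```

Under these assumptions, a trillion-parameter model does not fit on a single GPU at any of the listed precisions, which is why the interconnect between GPUs matters as much as the memory on each one. The sweep time is a lower bound on how quickly one GPU can read its entire memory once, which in turn bounds bandwidth-limited steps such as token generation.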

Empowering the Fairwater AI Superfactories

In partnership with Microsoft, NVIDIA is equipping the Fairwater AI superfactories with Vera Rubin NVL72 rack-scale systems, scaling up to hundreds of thousands of Superchips. The collaboration aims to build the most powerful AI infrastructure in the world for training the next generation of reasoning-capable models. Other industry players, including CoreWeave and Red Hat, are preparing to incorporate the Rubin platform into their own offerings.

The Era of Agentic AI and Reasoning

Rubin is designed to support agentic AI, in which models tackle complex reasoning tasks, carry out research, and execute business workflows on their own. The platform’s efficiency is intended to let AI agents run at lower cost and with higher reliability, making it practical for companies to keep agents deployed continuously without incurring substantial operating expenses.

Energy Efficiency and Networking Innovations

Despite the increase in compute, Rubin also delivers substantial gains in power efficiency. The Spectrum-6 Ethernet Switch and Spectrum-X systems provide a 5x improvement in power efficiency. Advanced cooling and photonics allow the NVL72 to sustain peak performance without thermal throttling. The BlueField-4 DPU further improves system efficiency by offloading networking tasks.

Availability and Future Plans

NVIDIA plans a broad rollout of the Rubin platform in the second half of 2026, continuing its annual cadence of new architectures. While pricing will be customized, the emphasis is on lowering total cost of ownership by delivering more performance with fewer GPUs. As the launch date nears, further details on the Olympus cores and the NVLink 6 interconnect are expected.

Conclusion

NVIDIA’s Rubin platform marks a notable architectural step, combining six distinct chips for higher AI performance and efficiency. Through extreme co-design, the platform aims not only to increase compute but also to improve energy efficiency and reduce operating costs. Working with partners such as Microsoft, NVIDIA is positioning Rubin as the new benchmark for AI infrastructure and as the foundation for agentic AI and reasoning-driven models.